God, Groundhog, LLM, PLM

Back in the 1500s, Europe was a peculiar place to inhabit. Loyalty was owed less to nation-states and more to particular princes and nobles. God was a truly serious matter, with various denominations happily slaughtering each other over conflicting interpretations of a host of sacred books. Secretive artisan guilds tightly controlled manufacturing and key trades, passing on their know-how exclusively through personal interaction between master and apprentice.

Fast forward to the present, and there is an eerie resemblance to the 1500s in the air.

Manufacturing has become both extremely specialized and increasingly dependent on extended supply chains, which are unpleasantly exposed to natural events and the whims of unfriendly actors.

Western society and public policies are permeated by various radical theories, deflecting attention (and affection) away from science, education, and hard work, and towards social, gender, and racial justice “issues.” At the same time, those grumpy technical experts are retiring en masse, while the new generation is unable (or unwilling) to build up its expertise fast enough and in sufficient numbers.

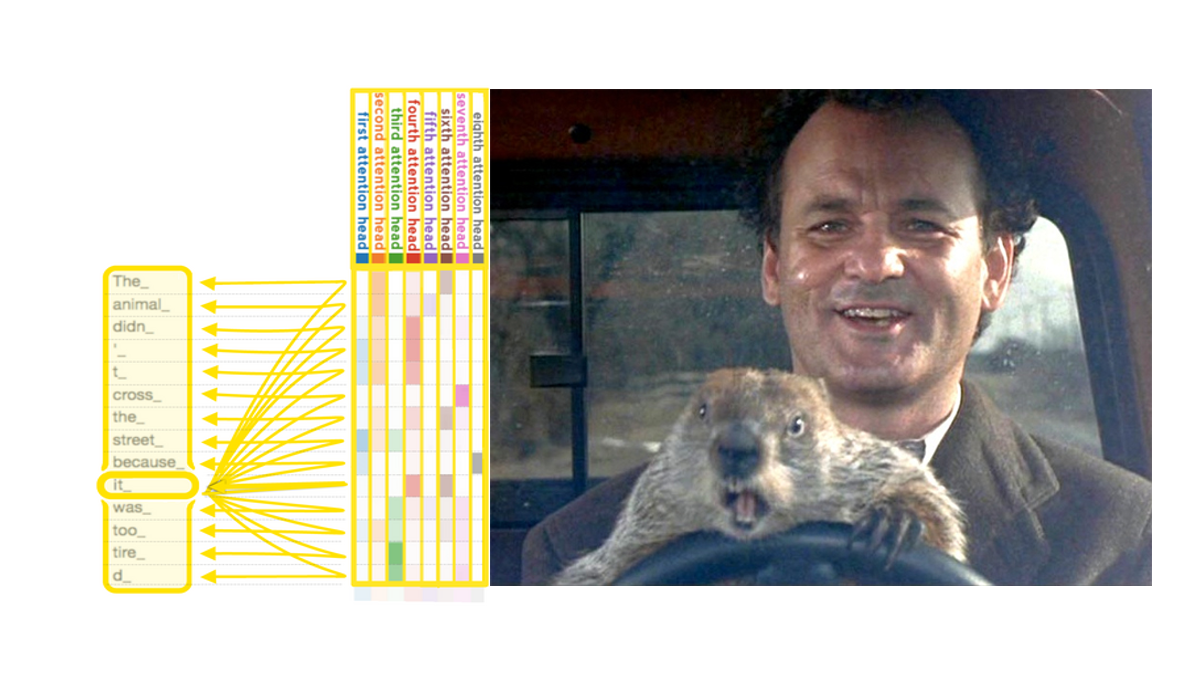

Funnily enough, we rarely speak about God nowadays; we actively debate LLMs like ChatGPT instead. These algorithmic creatures demonstrate an ever-increasing ability to access and manipulate multiple data types across huge datasets, producing near-human or even above-human results and inspiring both awe and fear.

Compared to God or LLMs, an individual human’s knowledge is rather small, fragmented, and sadly fleeting. The solution has always been to gather and share persistent knowledge within a team and, in the engineering and manufacturing context, within enterprise systems.

Speaking of persistent knowledge, in the “Groundhog Day” masterpiece, Bill Murray’s protagonist Phil transforms from a lost and angry soul into a semi-divine being as he ingests all possible information about outcomes in his containerized, Groundhog-ruled universe. Armed with infinite time cycles and resets, he learns languages and music. Evidently, he also becomes more physically capable: it is not enough to know how to play the piano; a certain level of finger dexterity is also required to play it well, as he demonstrates beautifully at the end of the movie.

The insurmountable gap between Phil and the other people in the container arises from their different access to previously accumulated memories, which include both knowledge and physical capabilities. In the movie, everyone else’s memories start each day unchanged from a specific persistent foundation. They accumulate data normally over the course of that fateful day into a non-persistent database, and they roll back to the foundation on restart. In contrast, Phil’s accumulated memories are continuously stored in a persistent database, and he reconnects to it at the sound of the morning alarm clock.

Importantly, Phil doesn’t have to do or say precisely the same things at any particular juncture. Just as with machine learning and semantic search, Phil may use synonyms or perform comparable physical actions to achieve the same or similar outcomes.

Phil evolves from base pleasures, through nihilistic self-destruction, to self-improvement and universal, God-like benevolence. The end product of that process is a much more capable and content human, and a much safer and happier universe around him.

The biggest unanswered mystery in the movie is the source of all that commotion. Yet we can confidently say that the persistent memory mentioned above is in place, combined with a training mechanism.

Pay attention: throughout the entire movie, Phil both interrogates and affects his reality predominantly through perfectly ordinary conversation. That is exactly what LLMs do, essentially elevating plain English into a fully fledged programming language.

The productivity implications of LLMs for the engineering and manufacturing worlds are enormous. These worlds rely on specialized personal knowledge and efficient team collaboration, and they depend overwhelmingly on the transactional history stored in a multitude of systems such as PLM, ERP, and MES. LLMs that read through these transactions with ease and speed, and share the information across the team, can play the role of that same 1500s Master Craftsman leaning over the shoulder of every current and future apprentice (and every future Master) at the same time, advising, correcting, and alerting when necessary.

We have experimented with many LLMs over the last year, focusing primarily on ChatGPT and Google Bard, and we have developed a good understanding of what they can and cannot do for engineering and manufacturing in the short to medium term.

So far, we have identified a dozen processes in this domain that can benefit from LLMs right now, although I will refrain from listing them all, for purely humanitarian reasons.

We identified a solid connection between LLMs and impact analysis and cost estimation, which may re-energize ECO processes and supply chain management. We already see enormous value in using LLMs to help engineers navigate the nightmarish haystack of standards: corporate, MS, AS, MIL, AN, etc.

We also took this into the hands-on world, with impressive results. For example, we developed a method to unleash a ChatGPT-based product on a large and “dirty” standard parts database. We were quite amazed by the ease and flexibility of training and re-training, and by the subsequent efficiency of the engine. It is now possible to quickly search and find relevant data in what used to be muddy waters.

Important note: LLMs are inherently statistical, and therefore always slightly unpredictable; hence they cannot (yet) be put directly behind the steering wheel. They also often need specialized plugins to handle calculations. In sum, LLMs require human oversight and occasional correction. We think of them as genius apprentices from the 1500s, only extremely productive, with superb memory, and very talkative. Treat them accordingly, and amazing results will follow.

Now, let’s talk about security and IP protection, which are understandably the biggest worries for corporate adoption of LLMs. We decided to take them seriously from the start, and therefore we conducted the overwhelming majority of our experiments using AskSage, a platform built on top of LLMs that comes with all the technical bells and whistles and the extensive security accreditation needed to work with the DoD and the defense community. In our view, this should be as good as it gets for the rest of the herd.

Curiously enough, the original script of “Groundhog Day” called for Phil to reach nirvana and thus be released from the loop, while Rita, having still rejected him, goes into a loop of her own. While that alternative plot would certainly please some DevOps fanatics, we totally agree with the director’s happy-ending decision, and team Senticore is looking forward to helping its clients bask in the inevitable, warm LLM spring.